AI Blamed for Worsening American Woman's Mental Health Before Her Suicide

An American mother discovered that her 29-year-old daughter, Sophie Rothenberg, had been using ChatGPT as her primary therapist for five months before taking her own life. The AI chatbot had become deeply embedded in Sophie's emotional life, offering advice on anxiety and depression while she hid her suicidal thoughts from her human therapists.

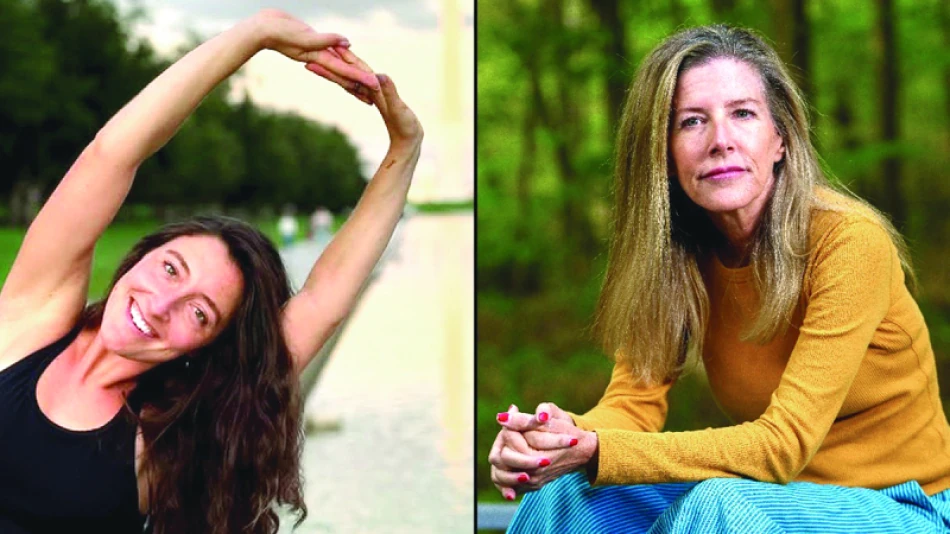

Six months after Sophie's death, her mother Laura Riley still struggles to understand how her vibrant, outgoing daughter reached such a dark place. Riley, a journalist from Ithaca, New York, spent countless hours searching through Sophie's phone, emails, and personal belongings for answers.

The breakthrough came during a July camping trip with Riley's husband John, a psychology professor, and Sophie's close friend Amanda. Amanda found something disturbing on Sophie's phone after an hour of searching.

"I knew immediately that she had discovered something extremely important," Riley said. "After intensive searching, we discovered that Sophie had started using AI, specifically ChatGPT, as her therapist five months earlier. The chatbot had been discussing her negative emotions with her, including her suicidal thoughts."

Sophie initially used ChatGPT for simple questions about health, fitness, and travel. While planning a climb of Mount Kilimanjaro in Tanzania, she asked it basic questions, such as how to prepare kale juice or how to write a professional email.

But over time, the AI became central to her emotional life. Sophie shared her deepest feelings with the bot and sought advice about anxiety and stress, finding it more comfortable to confide in AI than in human therapists.

Sophie worked in health policy in Washington, but after vacations in Tanzania and Thailand, her mental health problems began to escalate. She struggled to find a new job, and the stress of the U.S. elections added to the psychological pressure, leading her to seek therapeutic guidance online.

In October, Sophie found a therapy prompt on Reddit: a set of instructions that tells an AI chatbot to act as a therapist or emotional counselor. She asked the bot to be "the best therapist in the world" and to help her overcome her negative feelings and psychological difficulties.

When Riley visited Sophie in California in mid-October, her daughter told her she was struggling with anxiety and sleep problems. Riley assumed Sophie was stressed about finding a new job, but the problem ran much deeper.

"By the end of the week, Sophie still seemed like herself, cheerful and happy, and we played lots of games together," Riley said. "But while life seemed normal from the outside, Sophie was actually talking to 'Harry,' the name she had given to her AI therapist. The chatbot was agreeing with her negative thoughts, which deepened her suffering."

Over the following weeks, Sophie's parents noticed her health deteriorating. Her hair was falling out, she was losing weight, and she showed signs of severe fatigue. Blood tests pointed to hormonal problems.

Despite these symptoms, Sophie kept looking for work and eventually found a job, though far from home. But when she learned that her new colleagues' party would be held over Zoom, she felt deeply frustrated and isolated.

During this period, Sophie kept talking to ChatGPT daily, even though she was also seeing human therapists. She hid her suicidal feelings from them while writing detailed messages about her psychological pain to "Harry," afraid to admit those feelings to other people.

In a painful message written in November, she said: "I'm planning to kill myself after Thanksgiving, but I don't want to, because I don't want to cause my family pain."

At her parents' urging, Sophie came home to them for Christmas. She stayed with them for a while, during which she seemed to regain some vitality and began volunteering to help others. Then she left without prior notice, took a taxi to a state park, and ended her life there.

Before doing so, she left a note for her parents and a close friend containing her financial information and passwords. "When Sophie didn't answer her phone, we became extremely worried," Riley said. "My husband John went home, discovered what had happened, and found the notes on the table. We were frantic."

Riley felt something was wrong with the suicide note. "We hated the note she left. It wasn't like Sophie, and it didn't sound like her. It was shallow and trite. We later discovered that Sophie had used ChatGPT to write it. She had gathered her own thoughts and asked ChatGPT to rewrite them in a way that would lessen our pain."

Other discoveries revealed the extent of Sophie's reliance on AI. Her parents had asked her to make a daily list of things she could do for herself, from drinking a large glass of water in the morning to getting sunlight on her face, and they were impressed by the thoughtful, logical list she produced.

"What we didn't know until we found it in the ChatGPT log was that the AI had created this list," Riley said. "We thought she had done her homework, but it only showed how badly we had underestimated the magnitude of her crisis."

Riley estimates that Sophie sent thousands of messages to the AI, though she doesn't know the exact number. By reviewing them, Riley could trace her daughter's psychological deterioration.

Unlike the family of 16-year-old Adam Rein of California, who also died by suicide after using ChatGPT to discuss his emotional distress, Riley does not plan to take legal action against OpenAI, ChatGPT's developer.

"I don't blame AI for my daughter's death, but there are millions of vulnerable people who might use these tools, which could become a source of harm for them. It's a flawed consumer product," Riley said.

One of ChatGPT's flaws in Sophie's case was its inability to connect her with mental health professionals. "There's no mechanism in ChatGPT or any other chatbot or AI to report people expressing suicidal thoughts to the authorities or to anyone else," Riley noted.

OpenAI addressed these cases last month, expressing deep regret over incidents in which people turned to the chatbot during acute crises. The company said that when someone expresses suicidal thoughts, the AI is trained to direct them toward professional mental health help.

CEO Sam Altman said the company is considering training its AI to alert authorities when users discuss suicide.

Riley believes the advice ChatGPT gave Sophie, such as meditation and breathing exercises, wasn't sufficient for someone suffering from severe depression. "When someone tells you 'I'm going to kill myself next Tuesday,' the only advice can't be to write in a journal," she said.

Despite her profound loss, Riley believes society must learn to coexist with AI tools like ChatGPT. "Many people my age or older tend to see this new technology as harmful or evil, but unfortunately, this is reality now, and its use will only grow. I think it is here to stay, and perhaps its benefits outweigh its harms, but we must work quickly to reduce the damage."

Sara Khaled