Artificial Intelligence Threat: Government Agency Warns Against Manipulated Imagery

Abu Dhabi Warns Against AI Photo Apps as Deepfake Threats Escalate

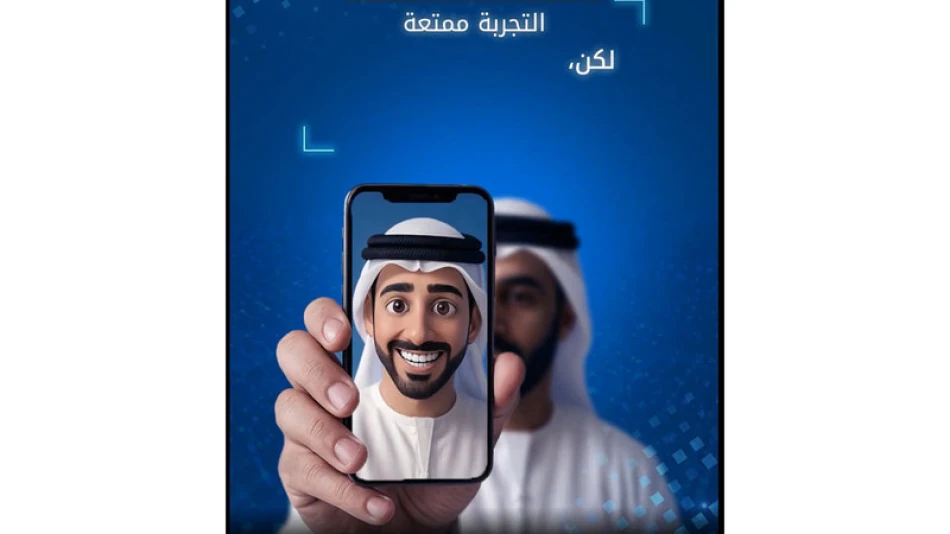

Abu Dhabi's Government Enablement Department has issued a stark warning about the hidden dangers of popular AI photo-editing applications, cautioning that users who upload personal images for cartoon filters or digital avatars are unknowingly surrendering sensitive biometric data that can fuel sophisticated fraud schemes and deepfake operations.

The Biometric Data Gold Rush

In an educational video posted on X (formerly Twitter), the department explained that a single uploaded photo can allow AI systems to map a person's facial features in detail. What appears to be harmless entertainment, such as transforming selfies into cartoon characters or digital art, can create a comprehensive biometric profile that bad actors could weaponize.

The warning comes as AI-powered photo applications have exploded in popularity across social media platforms, with millions of users worldwide sharing AI-generated versions of themselves without considering the long-term security implications.

From Fun Filter to Fraud Tool

According to the department, uploaded photos can be exploited in several dangerous ways: creating fake social media accounts for catfishing or romance scams, executing sophisticated financial fraud using the person's likeness, and generating convincing deepfake videos that could damage reputations or spread misinformation.

This represents a significant shift in digital fraud tactics. Traditional identity theft typically required stealing documents or personal information, whereas AI photo apps can expose high-quality biometric data that victims have submitted voluntarily.

Global Context: A Worldwide Vulnerability

Abu Dhabi's warning reflects growing international concern about biometric data security. The European Union's General Data Protection Regulation (GDPR) classifies biometric data used to identify a person as a special category of sensitive personal data, while countries such as Singapore have issued guidelines on how biometric data should be collected and stored.

The UAE's proactive stance aligns with its broader digital transformation strategy, in which rapid technology adoption must be balanced with robust cybersecurity measures. As a major financial hub, the Emirates cannot afford a widespread compromise of digital identities that could undermine trust in its digital economy.

Market Implications for AI Companies

This warning signals potential regulatory scrutiny for AI photo-editing applications, particularly those that lack transparent data handling policies. Companies operating in the Gulf region may face increased compliance requirements, while users may become more selective about which applications they trust with biometric data.

The development also highlights the growing value of biometric data as a commodity, suggesting that privacy-focused alternatives could gain market traction among security-conscious consumers.

Protecting Digital Identity

The department recommended several immediate protective measures: deleting unnecessary AI applications and any previously uploaded photos, restricting app permissions to prevent data access, limiting sharing of personal images on social platforms, and educating family members about potential risks.

These recommendations reflect a broader shift toward personal responsibility in digital security, where users must actively manage their biometric footprint rather than relying solely on platform protections.

The Deepfake Arms Race

Abu Dhabi's warning underscores how deepfake technology has democratized sophisticated fraud techniques. What once required expensive equipment and technical expertise can now be accomplished with consumer-grade AI tools and a single photograph.

This technological shift demands new approaches to digital verification and identity protection, potentially accelerating adoption of blockchain-based identity solutions and advanced authentication methods in the region.

Sara Khaled